What Are Agentic AI Frameworks?

Agentic AI frameworks are sets of rules, decision gates, and task loops that guardrail Large Language Models (LLMs).

They broaden an LLM’s capabilities and steer it in the required direction. By defining the scope and direction of its behavior, they turn solutions like ChatGPT or Claude into goal-driven digital workers. Frameworks focus on routing, governance, monitoring, and integration with APIs or apps.

While AI agents aren’t fully autonomous yet, frameworks help them handle planning, tool calls, memory, safety, and orchestration correctly and, most importantly, as expected.

In short:

An agentic AI framework is the operational architecture that defines and guides the scope and autonomy of the digital workforce.

Moreover, agentic AI frameworks go beyond the capabilities of reactive models that act only when prompted. If you’d like to learn more, read the Agentic AI Tools and Platforms Overview.
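To make the idea of rules, decision gates, and task loops more concrete, here is a minimal, framework-free sketch. Everything in it is a hypothetical stand-in rather than any real framework's API: `run_agent` is the task loop, `ALLOWED_TOOLS` is the rule set, and `fake_llm` is a scripted replacement for a model call.

```python
from typing import Callable

# Hypothetical tool registry: the "rules" say which tools the agent may call.
ALLOWED_TOOLS: dict[str, Callable[[str], str]] = {
    "search": lambda q: f"results for {q!r}",
    "calculator": lambda expr: str(sum(int(x) for x in expr.split("+"))),
}

def fake_llm(goal: str, memory: list[str]) -> tuple[str, str]:
    """Scripted stand-in for a model call: returns (action, argument)."""
    if not memory:
        return "calculator", "2+3"
    return "finish", f"{goal}: {memory[-1]}"

def run_agent(goal: str, llm=fake_llm, max_steps: int = 5) -> str:
    memory: list[str] = []                  # short-term memory of tool results
    for _ in range(max_steps):              # task loop with a hard step budget
        action, arg = llm(goal, memory)
        if action == "finish":
            return arg
        if action not in ALLOWED_TOOLS:     # decision gate: block unknown tools
            memory.append(f"blocked: {action}")
            continue
        memory.append(ALLOWED_TOOLS[action](arg))
    return "stopped: step budget exhausted"

print(run_agent("add numbers"))
```

The step budget and the allow-list are the two guardrails here: a model that keeps asking for a tool outside the list never gets to run it, and the loop ends on its own.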

Agentic AI Layers

Here’s a quick breakdown of modern AI layers:

- AI tools are specific applications or software agents designed to perform discrete tasks.

- AI workflows refer to the orchestration and sequence of automated steps. This moves beyond a single task to automating a chain of events, often handling the “handoffs” between different systems or people.

- AI frameworks are the broadest layer here. They represent the underlying infrastructure that allows tools and workflows to operate securely and at scale. This is the “foundation” that prevents a disjointed “Frankenstack” of incompatible tools.

In short:

AI Tools operate within an AI Workflow, which is built upon an AI Framework.

Simple RAG vs. Agentic RAG

RAG (Retrieval-Augmented Generation) is one of the most basic terms in the framework realm.

Fundamentally, it is the architectural pattern that gives an AI access to your private data (like PDFs) so it doesn’t rely solely on public training data.

However, the industry is now evolving from Simple RAG, which blindly fetches documents once like a search engine, to Agentic RAG, which acts like a researcher – actively reasoning, re-querying, and verifying facts until the answer is correct.

The distinction between traditional Retrieval-Augmented Generation (RAG) and its advanced form, Agentic RAG, is crucial in 2025.

[Figure: the operational steps of agentic RAG]

Here’s a short differentiation:

- Simple RAG operates via a static loop – the system receives a query, then fetches a document, and generates an answer. It lacks self-correction.

- Agentic RAG acts like a researcher – it employs an autonomous reasoning layer to analyze initial results, rewrite queries if the data is insufficient, and cross-reference multiple sources.
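The difference between the two loops can be sketched in a few lines of plain Python. This is a toy illustration under stated assumptions: `DOCS` is a made-up corpus, `retrieve` is a keyword lookup instead of a vector search, and `is_sufficient` and `rewrite` stand in for steps that an LLM would normally perform.

```python
# Toy corpus standing in for a private document store (hypothetical data).
DOCS = {
    "refund policy": "Refunds are issued within 14 days.",
    "returns": "See the refund policy for timing.",
}

def retrieve(query: str) -> str:
    """Naive keyword lookup; a real system would use a vector index."""
    return DOCS.get(query, "")

def simple_rag(query: str) -> str:
    # One fetch, one answer, no self-correction.
    return retrieve(query) or "no answer"

def is_sufficient(text: str) -> bool:
    # Stand-in for an LLM-based grader: here, "contains a concrete number".
    return any(ch.isdigit() for ch in text)

def rewrite(query: str, context: str) -> str:
    # Stand-in for LLM query rewriting: follow a pointer found in the context.
    return "refund policy" if "refund policy" in context else query

def agentic_rag(query: str, max_rounds: int = 3) -> str:
    for _ in range(max_rounds):
        context = retrieve(query)
        if is_sufficient(context):
            return context
        new_query = rewrite(query, context)
        if new_query == query:      # nothing left to try
            break
        query = new_query
    return "no answer"
```

For the query `"returns"`, `simple_rag` hands back the unhelpful pointer document, while `agentic_rag` notices the answer is incomplete, re-queries, and returns the actual policy text.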

Now that we’ve covered these distinctions, let’s explore the top 3 agentic AI frameworks.

Top 3 Agentic AI Frameworks | 2025

While there are many agentic AI frameworks used across the world, there are some that dominate in the West.

Market data and developer adoption metrics indicate that three agentic AI frameworks lead the Python ecosystem today.

They are LangChain, AutoGen and CrewAI.

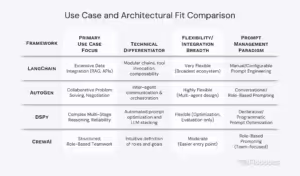

LangChain is the definitive “go-to framework” used to connect LLMs with the external world.

It is built around “chains” of reasoning, which allow developers to break down large tasks into smaller, more manageable steps.

LangChain is a standard for integrating LLMs with external knowledge sources, APIs, and reasoning logic. Its modular design makes LangChain highly effective for applications requiring complex, segmented workflows, such as financial risk modeling.
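The “chain” idea itself is easy to show without any library. The sketch below is not LangChain's API; it only illustrates, in plain Python, how a large task can be split into small steps that feed each other. The `chain` helper and the three step functions are hypothetical, and in a real application each step could wrap a prompt and a model call.

```python
from functools import reduce
from typing import Callable

Step = Callable[[str], str]

def chain(*steps: Step) -> Step:
    """Compose steps left to right into one callable."""
    return lambda text: reduce(lambda acc, step: step(acc), steps, text)

# Hypothetical steps for a simplified risk check.
def extract_amount(text: str) -> str:
    return "".join(ch for ch in text if ch.isdigit())

def classify_risk(amount: str) -> str:
    return "high" if int(amount) > 10_000 else "low"

def explain(label: str) -> str:
    return f"Risk level: {label}"

risk_pipeline = chain(extract_amount, classify_risk, explain)
print(risk_pipeline("Loan request for 25000 USD"))
```

Because every step has the same shape (text in, text out), steps can be swapped or reordered without touching the rest of the pipeline.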

LangChain’s most significant competitive advantage is its unrivaled interoperability.

The framework offers the most extensive ecosystem available, boasting over 1000 integrations across the entire GenAI stack, including various LLM providers, vector databases, document loaders, and deployment platforms.

This ensures high flexibility and adaptability for production-grade systems.

It is particularly evident in its extensive enterprise tool integrations.

For example, LangChain facilitates deep interaction with Salesforce CRM, allowing developers to execute complex Salesforce Object Query Language (SOQL) queries and perform CRUD (Create, Read, Update, Delete) operations on Salesforce objects directly through the framework.

LangChain also integrates with HubSpot, BigQuery, Slack, Notion, Jira, and many more.

As applications increase in complexity, LangChain often necessitates significant developer time dedicated to manual prompt engineering and the configuration of intricate chains.

While it offers unparalleled flexibility among AI frameworks, it also comes with a price – high scaling costs.

High demand for low-level prompt expertise creates an architectural tension that justifies the existence of specialized, optimization-focused alternatives. Moreover, LangChain’s extensive adoption is validated by specific, named enterprise success stories:

- Dun & Bradstreet: Leveraged LangChain to build the ChatD&B assistant, specifically for automating the explanation of complex credit-risk reasoning.

- WEBTOON Entertainment: Employs the graph-based extension, LangGraph, to manage sophisticated visual-text content workflows.

However, traditional linear chains often prove inadequate for production-grade AI agents that require stateful memory and iterative decision-making. All of the aforementioned architectural constraints led to the necessary introduction of LangGraph.

LangGraph is an extension that utilizes a graph-based approach to construct stateful, resilient, and inspectable multi-agent pipelines.

The market is moving from basic LLM wrappers toward explicit control over multi-step, iterative execution.
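A graph-based, stateful pipeline can be pictured as nodes that update a shared state plus edges that decide where to go next. The sketch below is a plain-Python illustration of that idea, not LangGraph's API; the node names, the `next_node` routing rule, and the "approve on the second attempt" check are all assumptions made for the example.

```python
from typing import Callable

State = dict
Node = Callable[[State], State]

def draft(state: State) -> State:
    attempts = state["attempts"] + 1
    return {**state, "draft": f"draft v{attempts}", "attempts": attempts}

def review(state: State) -> State:
    # Hypothetical check: approve on the second attempt.
    return {**state, "approved": state["attempts"] >= 2}

NODES: dict[str, Node] = {"draft": draft, "review": review}

def next_node(current: str, state: State) -> str:
    """Edges: draft -> review; review loops back to draft until approved."""
    if current == "draft":
        return "review"
    return "END" if state["approved"] else "draft"

def run_graph(state: State, start: str = "draft", max_steps: int = 10):
    trace, current = [], start
    while current != "END" and len(trace) < max_steps:
        state = NODES[current](state)
        trace.append(current)           # inspectable execution path
        current = next_node(current, state)
    return state, trace

final_state, path = run_graph({"attempts": 0, "approved": False})
```

The loop from `review` back to `draft` is exactly what a linear chain cannot express, and the recorded `path` is what makes the run inspectable afterwards.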

AutoGen is engineered specifically for building sophisticated multi-agent ecosystems where several specialized agents can communicate, debate, and collaborate to achieve a goal, mirroring human team dynamics.

This capability is highly suited for applications such as collaborative problem-solving, negotiation, and brainstorming.

AutoGen has demonstrated significant potential in pilot tests, where multi-agent systems reportedly outperformed human teams in tasks such as report generation and code debugging, largely due to built-in self-correction and negotiation features.

AutoGen’s modular, conversational usage patterns successfully lower the entry barrier for organizations experimenting with multi-agent workflows.
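The conversational pattern behind such multi-agent setups can be sketched without the library. The code below is not AutoGen's API; it is a minimal illustration in which two hypothetical agents, a writer and a reviewer, exchange messages until the reviewer approves. Both reply functions are scripted stand-ins for LLM-backed agents.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Agent:
    name: str
    respond: Callable[[str], str]   # stand-in for an LLM-backed reply function

def writer_reply(message: str) -> str:
    # Hypothetical behaviour: fix the code once the reviewer reports a bug.
    if "bug" in message:
        return "def add(a, b): return a + b"
    return "def add(a, b): return a - b"

def reviewer_reply(message: str) -> str:
    return "APPROVED" if "a + b" in message else "Found a bug: wrong operator"

def converse(a: Agent, b: Agent, task: str, max_turns: int = 6) -> list[tuple[str, str]]:
    transcript, message = [], task
    speaker, listener = a, b
    for _ in range(max_turns):
        message = speaker.respond(message)
        transcript.append((speaker.name, message))
        if message == "APPROVED":       # termination condition
            break
        speaker, listener = listener, speaker
    return transcript

log = converse(Agent("writer", writer_reply), Agent("reviewer", reviewer_reply),
               "Write an add function")
```

The self-correction comes from the loop itself: the first draft is wrong, the reviewer objects, and the writer's second turn repairs it before the conversation ends.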

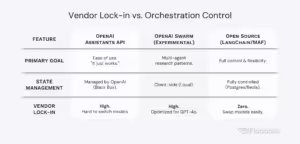

In recognition of the market’s need for a unified, robust standard, Microsoft is currently consolidating AutoGen (multi-agent orchestration) and Semantic Kernel (enterprise-grade features) into the Microsoft Agent Framework.

Microsoft Agent Ecosystem (MAE)

MAE is the default choice for enterprises on the Azure stack.

Use the Microsoft Agent Framework (MAF) to write the code (connecting to local data or specialized logic) and Azure AI Agent Service to run it (handling scaling, auth, and history).

Microsoft’s strategy has evolved from two separate libraries into a unified “Layer Cake” for enterprise agents:

For years, developers had to choose between Semantic Kernel (great for single-agent enterprise integration) and AutoGen (great for multi-agent conversations). In 2025, Microsoft unified these into the Microsoft Agent Framework (MAF).

MAF is the “Best of Both Worlds” SDK. It takes the multi-agent orchestration patterns from AutoGen (agents talking to agents) and rebuilds them on the robust, type-safe foundations of Semantic Kernel.

It supports the “Agent-to-Agent” (A2A) protocol, which lets agents built in Python collaborate with agents built in C# or Java.

Azure AI Agent Service

While MAF is the code, the Azure AI Agent Service is the infrastructure. It solves the “Day 2” problems of running agents in production:

- State Persistence – Automatically saves conversation history so you don’t have to manage databases.

- Enterprise Security – Uses Entra ID (formerly Azure AD) to ensure agents only access data they are authorized to see.

- Scalability – Runs on serverless infrastructure, so you don’t need to manage GPU clusters for your agent orchestration layer.

This unified successor provides critical enterprise-grade features, including robust thread-based state management, type safety, telemetry collection, filters, and explicit, graph-based workflows. These features are essential for granting developers explicit control over multi-agent execution paths in long-running or human-in-the-loop scenarios.

Crucially, Microsoft has prioritized the solution to AI scaling and governance challenges with the introduction of Microsoft Agent 365, defining it as the “control plane for AI agents”.

Given IDC’s prediction of 1.3 billion agents by 2028, enterprises require robust infrastructure to manage and govern this massive scale securely.

Agent 365 addresses the primary concerns of IT leaders – sprawl, security, and compliance – by integrating agent management into the trusted Microsoft 365 Admin Center. This includes providing:

- Registry – A single, comprehensive inventory of all agents in the organization, preventing “agent sprawl.”

- Access Control – Leveraging Microsoft Entra ID for secure management and access.

- Unified Observability – Delivering telemetry, dashboards, and alerts across the entire agent fleet.

This strategic focus on governance and security demonstrates that Microsoft is addressing the C-suite’s biggest concern, ensuring the framework can transition successfully from developmental proof-of-concept to controlled, global enterprise deployment.

How to Choose an Agentic AI Framework?

The selection process for an agentic framework must be guided by technical compatibility, organizational fit, and long-term sustainability. Enterprise criteria that must be considered systematically include:

- Scalability and Flexibility – The ability to support growing complexity and integrate custom components is vital. LangChain, with its vast ecosystem, is often cited as the most flexible, while AutoGen offers significant flexibility in designing multi-agent interactions.

- Integration with Existing Systems – Compatibility with core business logic and enterprise resource planning (ERP) systems is non-negotiable. Frameworks must support seamless interfacing with APIs and databases, exemplified by LangChain’s ability to integrate with complex platforms like Salesforce.

- Transparency and Explainability – For regulated industries or complex workflows, the agent’s execution must be traceable and inspectable, enabling debugging and regulatory compliance.

- Security and Compliance – Production deployments require stringent adherence to security protocols and data compliance standards.

- Cloud Infrastructure Fit – Organizations with significant cloud investment often benefit from native integrations. For example, Amazon Bedrock Agents offer the strongest native AWS integration and a fully managed deployment model, contrasting with the Do-It-Yourself (DIY) model common to AutoGen and CrewAI.

The CLEAR Standard

The CLEAR Framework (Cost, Latency, Efficacy, Assurance, Reliability) is a holistic evaluation standard specifically designed for assessing enterprise agent performance across five critical dimensions.

The Cost dimension is often overlooked, yet it is paramount for Return on Investment (ROI).

Experimental evaluation has demonstrated that optimizing agents solely for accuracy (Efficacy) can result in systems that are up to 10x more expensive than cost-aware alternatives that achieve comparable task success rates.

Therefore, enterprise deployment requires strategic, multi-objective optimization that balances performance against operational expense.
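One simple way to express this trade-off is to compare configurations by cost per successful task instead of raw accuracy. The sketch below assumes hypothetical measurements (the three `AgentConfig` entries are invented, not benchmark results) and a hypothetical `pick` helper that sets a minimum success bar and then minimizes cost.

```python
from dataclasses import dataclass

@dataclass
class AgentConfig:
    name: str
    success_rate: float      # fraction of tasks solved (Efficacy)
    cost_per_task: float     # average USD per attempted task (Cost)

    @property
    def cost_per_success(self) -> float:
        return self.cost_per_task / self.success_rate

# Hypothetical measurements, not benchmark results.
candidates = [
    AgentConfig("accuracy-tuned", success_rate=0.92, cost_per_task=1.00),
    AgentConfig("cost-aware", success_rate=0.90, cost_per_task=0.10),
    AgentConfig("cheap-but-weak", success_rate=0.60, cost_per_task=0.05),
]

def pick(configs, min_success: float):
    """Cheapest config per successful task among those that meet the success bar."""
    eligible = [c for c in configs if c.success_rate >= min_success]
    return min(eligible, key=lambda c: c.cost_per_success) if eligible else None

best = pick(candidates, min_success=0.85)
```

With these made-up numbers, the accuracy-tuned agent gains two points of success rate at roughly ten times the cost per solved task, so the cost-aware configuration wins once the success bar is met.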

The analysis of Latency reveals significant architectural challenges, particularly in web-interactive agents. Empirical findings demonstrate that the LLM API and the external web environment both contribute substantially to the total runtime.

Specifically, the web environment latency–involving network fetching and HTML parsing–accounts for as much as 53.7% of the total execution time of a web-based agentic system.

This significant contribution from non-LLM components proves that optimizing the core model or prompt is insufficient for production scaling.

To mitigate this web environment overhead, specialized infrastructure solutions are required. The proposed technical solution is SpecCache, a caching framework augmented with speculative execution. It is an architectural optimization technique, not a downloadable framework like LangChain.

SpecCache is designed to minimize network and parsing delays, reducing web environment overhead by up to 3x and improving the cache hit rate by up to 58x compared to random caching strategies.

This highlights that latency reduction necessitates infrastructure-level intervention, moving beyond framework-specific tuning.
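The general idea of combining a cache with speculative prefetching can be sketched as follows. This is not SpecCache's actual implementation; the `SpeculativeCache` class, the `predict_next` callback, and the toy `SITE` are assumptions made for illustration, and the prefetch runs inline here, whereas a real system would do it in the background while the LLM is reasoning.

```python
class SpeculativeCache:
    def __init__(self, fetch, predict_next):
        self.fetch = fetch                  # slow call: network fetch + HTML parsing
        self.predict_next = predict_next    # guesses which URLs are needed next
        self.cache: dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    def get(self, url: str) -> str:
        if url in self.cache:
            self.hits += 1
        else:
            self.misses += 1
            self.cache[url] = self.fetch(url)
        page = self.cache[url]
        # Speculative step: warm the cache for predicted follow-up pages.
        for candidate in self.predict_next(url, page):
            if candidate not in self.cache:
                self.cache[candidate] = self.fetch(candidate)
        return page

# Hypothetical site: every page links to the next one.
SITE = {"/a": "link:/b", "/b": "link:/c", "/c": ""}
fetch = lambda url: SITE[url]
predict = lambda url, page: [page.split("link:")[1]] if "link:" in page else []

cache = SpeculativeCache(fetch, predict)
for url in ["/a", "/b", "/c"]:
    cache.get(url)
```

In this toy run only the first request is a miss; the two follow-up pages are already cached by the time the agent asks for them, which is the effect the speculative step is meant to produce.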

Governance, Security, and Compliance

The sheer scale of anticipated agent deployment – with 1.3 billion agents predicted by 2028 – creates an urgent need for centralized governance. Uncontrolled growth leads to the “agent sprawl problem,” posing severe security and compliance risks.

The solution lies in implementing a unified control plane for managing autonomous systems. Frameworks like Microsoft Agent 365 directly address this gap by providing essential enterprise management capabilities:

- Registry and Access Control – Agent 365 provides a single source of truth for all agents, eliminating blind spots and leveraging Microsoft Entra ID for secure access control and agent identification.

- Security and Unified Observability – It enables IT leaders to monitor and govern the entire agent fleet through unified telemetry and alerts, ensuring compliance and reducing organizational risk.
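What a control plane does at its core can be illustrated with a small registry. The sketch below is not Agent 365's API; `AgentRegistry`, `AgentRecord`, and the scope strings are hypothetical names used to show the three pieces together: one inventory, a scope-based access check, and an audit trail for observability.

```python
from dataclasses import dataclass, field

@dataclass
class AgentRecord:
    agent_id: str
    owner: str
    allowed_scopes: set[str] = field(default_factory=set)

class AgentRegistry:
    """Single inventory of agents plus a scope check and an audit trail."""

    def __init__(self):
        self._agents: dict[str, AgentRecord] = {}
        self.audit_log: list[tuple[str, str, bool]] = []

    def register(self, record: AgentRecord) -> None:
        if record.agent_id in self._agents:
            raise ValueError(f"duplicate agent id: {record.agent_id}")
        self._agents[record.agent_id] = record

    def authorize(self, agent_id: str, scope: str) -> bool:
        record = self._agents.get(agent_id)
        allowed = record is not None and scope in record.allowed_scopes
        self.audit_log.append((agent_id, scope, allowed))   # observability hook
        return allowed

    def inventory(self) -> list[str]:
        return sorted(self._agents)

registry = AgentRegistry()
registry.register(AgentRecord("invoice-bot", "finance", {"erp:read"}))
```

An unregistered agent is denied by default, and every decision, allowed or not, lands in the audit log, so "shadow" agents show up as denied requests instead of going unnoticed.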

For large-scale organizations, the most robust strategy often involves a hybrid approach, leveraging the technical specialization of multiple frameworks (e.g., LangChain for integration, DSPy for reliability) while centralizing security, compliance, and management under a unified governance layer.

The “TL;DR” Decision Matrix:

- For the “Microsoft Shop” Enterprise – Microsoft Agent Ecosystem (MAF + Azure) is the undisputed winner. It offers the tightest security, unified observability, and a seamless path from code (Semantic Kernel) to cloud (Azure Agent Service).

- For Complex, Agnostic Orchestration – LangChain & LangGraph remain the industry heavyweights. If you need to chain 5 different models, connect to Salesforce, and manage complex loops, this is your toolkit—provided you are willing to manage the infrastructure.

- For “Code-First” Engineering Teams – PydanticAI is the rebellion against complexity. It is the best choice for senior Python teams who want type safety, reliability, and zero “magic” abstractions.

- For Data-Intensive & Financial Agents – Phidata and LlamaIndex dominate. Use them when your agent’s primary job is to read charts, parse messy PDFs, and output structured SQL or JSON data.

- For Future-Proofing Connectivity – Model Context Protocol (MCP) is non-negotiable. Regardless of which framework you choose, ensuring your data layer is MCP-compliant guarantees you won’t be locked into a single vendor.

Agentic AI Frameworks Examples

DSPy and LlamaIndex represent critical areas of specialization–LLM optimization and data orchestration–that often separate professional engineering solutions from experimental prototypes.

Here’s why:

DSPy (Declarative Self-improving Python) introduces a fundamental shift away from manual, low-level prompt engineering.

It employs a declarative paradigm, allowing developers to specify the desired high-level logic (the task and the data flow) while the framework systematically handles prompt optimization and few-shot example selection.

The imperative for this specialized approach is rooted in the instability and difficulty of scaling manually tuned prompts. In complex multi-stage reasoning pipelines involving stacked LLM calls and multi-hop logic, manual configuration becomes an untenable bottleneck.

DSPy’s built-in optimizers transform prompt tuning from an unstable craft into a scalable engineering discipline, resulting in higher reliability and consistency.
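The core idea behind an optimizer, searching over few-shot examples against a metric instead of hand-tuning them, can be shown in miniature. The sketch below does not use DSPy or mirror its API: `toy_model` is a scripted stand-in for an LLM, the datasets are invented, and `optimize_demos` is a brute-force search that real optimizers replace with far smarter strategies.

```python
from itertools import combinations

# Hypothetical labelled examples: (question, answer).
TRAIN = [("2+2", "4"), ("3+5", "8"), ("capital of France", "Paris")]
DEV = [("1+6", "7"), ("4+4", "8")]

def toy_model(demos, question: str) -> str:
    """Stand-in for an LLM: it only 'learns' arithmetic from >= 2 arithmetic demos."""
    arithmetic_demos = sum("+" in q for q, _ in demos)
    if "+" in question and arithmetic_demos >= 2:
        a, b = question.split("+")
        return str(int(a) + int(b))
    return "unknown"

def accuracy(demos, dataset) -> float:
    return sum(toy_model(demos, q) == a for q, a in dataset) / len(dataset)

def optimize_demos(train, dev, k: int = 2):
    """Exhaustively try every k-demo subset and keep the best-scoring one."""
    return max(combinations(train, k), key=lambda demos: accuracy(demos, dev))

best_demos = optimize_demos(TRAIN, DEV)
```

The developer states the metric and the data; which examples end up in the prompt is decided by measurement, which is the shift from craft to engineering described above.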

DSPy is uniquely suited for specific project types:

- Complex Multi-Step Reasoning: It excels in projects involving many chained LLM calls, where its modular design and automated tuning create more reliable pipelines.

- High Reliability Needs: For building robust conversational agents or complex information retrieval systems that require consistent, contextually accurate outputs, DSPy’s systematic optimization ensures a higher degree of robustness compared to traditional approaches.

Architecturally, LangChain and DSPy serve complementary purposes. LangChain is the superior choice for breadth–integrating diverse data sources and APIs. DSPy, conversely, is superior for depth–optimizing the consistency, accuracy, and reliability of LLM outputs across complex reasoning steps.

The rapid adoption and significant growth of DSPy’s community (16K GitHub stars by late 2024) provide strong evidence that the market recognizes the need for automated optimization solutions in production-grade systems.

LlamaIndex is the specialized open-source data orchestration framework that accelerates time-to-production for Generative AI applications requiring trusted, data-intensive agentic workflows.

Its core strength lies fundamentally in advanced Retrieval-Augmented Generation (RAG) capabilities, focusing on data integration, indexing, and retrieval.

The most frequent point of failure in enterprise GenAI/RAG deployments is not the LLM or the prompt, but the initial process of transforming complex, unstructured data into AI-ready formats. Enterprise data often resides in messy documents – complex PDFs, embedded images, nested tables, and handwritten notes. LlamaIndex addresses this bottleneck directly through its commercial tools:

- LlamaCloud and LlamaParse – These components deliver industry-leading document parsing and extraction capabilities. LlamaParse is explicitly cited as the premier solution for parsing complex documents in Enterprise RAG pipelines, due to its exceptional handling of intricate spatial layouts and nested tables. This specialization ensures data integrity, which is vital for building trustworthy agent-based models.

For orchestration, LlamaIndex offers the Workflows engine.

It is an event-driven, async-first engine that provides developers with granular control over stateful, multi-step AI processes. Workflows allow for complex operations such as looping, parallel path execution, and managed state maintenance.

The asynchronous-first nature of Workflows is optimized for modern Python web applications, enabling highly efficient parallel execution and accelerating the time-to-production for GenAI applications.

LlamaIndex’s authority in data-intensive environments is demonstrated by its successful deployment in critical sectors:

- Carlyle (Private Equity) – Uses LlamaParse for high-accuracy financial document analysis, investment research, and due diligence.

- KPMG – Utilizes LlamaIndex to provide essential context for data processing and analysis, thereby enhancing AI capabilities and delivering more accurate client insights.

By dominating the high-accuracy data parsing and indexing segment, LlamaIndex has carved out a unique and necessary niche as the default framework for data-centric agentic applications.
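The async-first, stateful style of the Workflows engine mentioned earlier can be approximated with the standard library. The sketch below does not use LlamaIndex; it relies only on `asyncio`, and the `parse_document`, `summarize`, and `workflow` functions are hypothetical. It shows the two properties that matter most: a parallel step and a state object that travels through the run.

```python
import asyncio

async def parse_document(name: str) -> dict:
    await asyncio.sleep(0.01)            # simulated I/O (parsing, API call)
    return {"doc": name, "pages": len(name)}

async def summarize(parsed: list[dict]) -> str:
    total = sum(p["pages"] for p in parsed)
    return f"{len(parsed)} documents, {total} pages"

async def workflow(doc_names: list[str]) -> dict:
    state = {"events": []}               # shared workflow state
    state["events"].append("start")
    # Parallel branch: parse all documents concurrently.
    parsed = await asyncio.gather(*(parse_document(n) for n in doc_names))
    state["events"].append("parsed")
    state["summary"] = await summarize(list(parsed))
    state["events"].append("stop")
    return state

result = asyncio.run(workflow(["10-K", "term-sheet"]))
```

Because the parsing calls run concurrently, total latency is close to that of the slowest document, not the sum of all of them.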

Phidata

Phidata is a specialized framework designed for building Multi-Modal Agents with long-term memory. Unlike LangChain (which is general-purpose), Phidata focuses heavily on “function calling” and structured data outputs.

It is the “go-to” framework for financial analysts and data teams.

While other frameworks struggle to output clean JSON from a PDF, Phidata excels at turning unstructured data (like a video or a messy Excel sheet) into structured database rows. It is frequently used for building “Investment Analyst” agents that can read charts, parse news, and output a structured report.

Why is Phidata a “Hidden Gem”?

Most agent frameworks focus on the reasoning loop (how the agent thinks). Phidata focuses on the memory and data layer (what the agent knows and keeps).

- Memory as Infrastructure, Not an Afterthought – In LangChain, adding persistent memory (saving a conversation to a database) requires setting up complex chains of commands. In Phidata, it is a single line of code. This makes it incredibly fast to ship stateful agents that remember users across sessions.

- Multi-Modal by Default – Phidata was one of the first frameworks to optimize for Gemini 1.5 Pro and GPT-4o’s vision capabilities. You can drop a video file into a Phidata agent, and it can analyze the visual frames and audio simultaneously—a capability that requires significant “glue code” in other frameworks.

- The “Function Calling” Specialist – Phidata abstracts away the complexity of OpenAI/Anthropic function calling. Instead of writing complex JSON definitions for your tools, you simply pass a Python function. Phidata automatically generates the JSON schema, handles the argument parsing, and returns the result to the LLM.
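Turning a Python function into a tool schema, as described in the last point, can be done with the standard library alone. The sketch below is not Phidata's code; `function_to_schema` and `get_stock_price` are hypothetical, and the output shape only loosely follows the style used by function-calling APIs.

```python
import inspect
from typing import get_type_hints

JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}

def function_to_schema(func) -> dict:
    """Build a tool description from a function's signature and docstring."""
    hints = get_type_hints(func)
    hints.pop("return", None)
    params = inspect.signature(func).parameters
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "parameters": {
            "type": "object",
            "properties": {n: {"type": JSON_TYPES.get(t, "string")}
                           for n, t in hints.items()},
            # Parameters without a default value are required.
            "required": [n for n, p in params.items()
                         if p.default is inspect.Parameter.empty],
        },
    }

def get_stock_price(ticker: str, days: int = 1) -> float:
    """Return the average closing price over the last `days` days."""
    return 0.0   # placeholder body for the example

schema = function_to_schema(get_stock_price)
```

The type hints and the docstring are the single source of truth here, so the tool definition cannot drift away from the function it describes.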

Agentic AI Frameworks | Lightweight Standards

While frameworks like LangChain and the Microsoft Agent Framework (MAF) aim to be “do-it-all” platforms, a powerful counter-movement emerged in 2025 focusing on simplicity and standardization.

These tools are critical for engineering teams who prefer “code over configuration.”

PydanticAI

PydanticAI is a code-first agent framework.

It rejects complex abstractions (like “Chains”) in favor of standard Python code and type hints. In business terms, it is the top choice for senior Python engineering teams building production agents who want full control and zero “magic.”

In 2024, many teams struggled to debug LangChain’s complex internal abstractions. PydanticAI offers a “what you see is what you get” experience. If you know Python, you know PydanticAI.

It uses Python’s native type system to guarantee that the data your agent outputs matches exactly what your API expects. This prevents the most common source of agent runtime errors.
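The principle of validating agent output against a typed schema can be shown with the standard library. This sketch deliberately avoids Pydantic and PydanticAI, so it is a simplified stand-in and not their API: the `Invoice` dataclass and the `parse_output` helper are hypothetical and only handle flat fields with simple types.

```python
import json
from dataclasses import dataclass, fields

@dataclass
class Invoice:
    customer: str
    total: float
    paid: bool

def parse_output(raw: str, schema=Invoice):
    """Validate raw model output against the dataclass before it reaches your API."""
    data = json.loads(raw)
    kwargs = {}
    for f in fields(schema):
        if f.name not in data:
            raise ValueError(f"missing field: {f.name}")
        value = data[f.name]
        if f.type is float and isinstance(value, int) and not isinstance(value, bool):
            value = float(value)         # accept 10 where 10.0 is expected
        if not isinstance(value, f.type):
            raise TypeError(
                f"{f.name}: expected {f.type.__name__}, got {type(value).__name__}"
            )
        kwargs[f.name] = value
    return schema(**kwargs)
```

A malformed response fails loudly at the boundary, where it can be retried or logged, instead of surfacing later as a confusing error deep inside the application.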

The Model Context Protocol (MCP)

MCP is an open standard (pioneered by Anthropic) that acts as a “USB-C port” for AI agents. It allows any agent to connect to any data source without writing custom integration code.

Before MCP, connecting 3 AI assistants to 5 different tools required 15 different integrations. With MCP, you build the tool interface once, and Claude, ChatGPT, and your custom MAF agents can all use it instantly.
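The arithmetic behind that claim is worth spelling out: point-to-point integrations grow as a product, while a shared protocol grows as a sum. The two helper functions below are hypothetical and exist only to show the calculation.

```python
def point_to_point(clients: int, tools: int) -> int:
    # Every client needs its own adapter for every tool.
    return clients * tools

def with_shared_protocol(clients: int, tools: int) -> int:
    # Each client and each tool implements the protocol once.
    return clients + tools

print(point_to_point(3, 5), with_shared_protocol(3, 5))   # 15 8
```

The gap widens quickly: at 10 assistants and 20 tools, the same calculation gives 200 custom integrations versus 30 protocol implementations.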

As of 2025, MCP has become the de-facto standard for data connectivity, supported by major heavyweights including Microsoft, Anthropic, and Replit.

Agentic AI Frameworks | Visuals & Low-Code

Not every agent needs to be written in pure Python.

In fact, a massive segment of the 2025 market – particularly Product Managers, Solution Architects, and “AI Ops” teams – has moved away from IDEs entirely. They rely on visual, drag-and-drop builders to prototype logic before handing it off to engineering, or increasingly, to deploy directly to production.

This shift has given rise to a “Low-Code Rebellion,” where the barrier to entry for building complex reasoning agents is no longer knowing syntax, but understanding system design.

Langflow

If LangChain is the engine, Langflow is the dashboard. Originally a simple UI wrapper, Langflow has evolved into the industry standard for “Visual Python.”

Its core premise is unique: every node you drag onto the canvas (a PDF loader, a Prompt, a Vector Store) is just a Python function under the hood. This means developers can “eject” to code at any time, eliminating the “black box” fear that plagues most no-code tools.

Following its acquisition by DataStax, Langflow has graduated from a hobbyist tool to an enterprise staple. It is now the fastest way to demo a “Chat with PDF” or RAG prototype to stakeholders without writing a single line of boilerplate.

Flowise

While Langflow excels at data pipelines, Flowise has carved out a niche in logic pipelines.

It is widely favored for more complex, enterprise-grade workflows that involve “If/Else” routing and stateful loops.

Flowise is the tool of choice for “AI Operations” teams. When a business rule changes—for example, “If the customer is a VIP, route to GPT-4; otherwise, use GPT-4o-mini”—an Ops manager can tweak the logic node in Flowise visually, instantly updating the production agent without waiting for a developer to redeploy code.
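Under the hood, a visual routing node boils down to data-driven rules. The sketch below is not Flowise's internal format; `ROUTING_RULES` and `route` are hypothetical, and the model names are plain strings taken from the example above.

```python
# Hypothetical routing table an Ops team could edit without touching agent code.
ROUTING_RULES = [
    {"when": {"tier": "vip"}, "model": "gpt-4"},
    {"when": {}, "model": "gpt-4o-mini"},     # empty condition = default rule
]

def route(customer: dict, rules=ROUTING_RULES) -> str:
    """Return the model of the first rule whose conditions all match."""
    for rule in rules:
        if all(customer.get(k) == v for k, v in rule["when"].items()):
            return rule["model"]
    raise LookupError("no routing rule matched")
```

Since the rules are data and the `route` function never changes, updating the business logic means editing a table, which is what makes the change safe to hand to a non-developer.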

n8n

The most significant disruption in the low-code space in 2025 comes from n8n. Originally a workflow automation tool, n8n pivoted aggressively to become the “Action Layer” of the agentic economy.

Unlike Langflow or Flowise, which focus on reasoning (how the agent thinks), n8n focuses on connectivity (what the agent does). It has become the de-facto “glue” for agents, offering native nodes that allow AI to autonomously control Slack, Email, CRMs, and ERPs.

In short:

n8n is the bridge between an abstract LLM and a concrete business outcome.

In 2025, the most effective teams use Langflow to prototype the brain, n8n to connect the hands, and Python only when absolute granular control is required.

Stack AI

For organizations that cannot afford the DevOps overhead of hosting open-source tools like Langflow or n8n, Stack AI is a go-to solution.

It is a SOC2-compliant, fully hosted platform designed for Product Managers who need to ship production-ready backends instantly. It abstracts away the infrastructure entirely, focusing purely on the prompt chain and data connections.

Agentic AI Frameworks | Enterprise Platforms

To fully understand the landscape of Agentic AI, one must look beyond open-source libraries like LangChain and CrewAI. While those tools offer granular control, they often require significant engineering overhead to manage infrastructure, security, and scaling.

Enterprise Platforms represent the “Managed Services” layer of the agentic stack.

They are important because they solve the “Day 2” problems of deployment:

- Governance,

- Identity Management,

- Serverless Scaling.

For large organizations, these platforms are often the bridge that turns a fragile Python prototype into a compliant, production-grade business application.

Here is how the two cloud giants are approaching agent architecture:

Google Vertex AI Agent Builder

Vertex AI Agent Builder is Google’s full-stack platform designed to build, scale, and govern enterprise agents directly on top of Gemini models and your existing Google Cloud Platform (GCP) data.

It differentiates itself with a comprehensive Agent Development Kit (ADK) that unifies orchestration with production-grade observability and governance.

In most cases, it is the best choice if your organization is already invested in the GCP / BigQuery ecosystem.

It is ideal for building internal copilots, customer support agents, and data analysis agents where strict security controls and native data integration are non-negotiable.

Amazon Bedrock Agents

Amazon Bedrock Agents allow developers to define autonomous agents that utilize foundation models, APIs, and proprietary data to execute complex multi-step tasks.

With the introduction of AgentCore (GA in Oct 2025), Amazon provides a dedicated agentic infrastructure to build, run, and monitor agents at scale without the need to provision servers.

It is a strong choice for teams building on AWS who want managed security, scaling, and observability without running their own orchestration layer.

It is particularly notable for its native support of Model Context Protocol (MCP) based tools, allowing for standardized connections to external data systems.

Agentic AI Frameworks | 2026 Predictions

According to Gartner, 40% of enterprise applications will feature embedded task-specific agents by 2026 (up from less than 5% today).

Here are the three trends that will define the next 12 months:

- The “Agent-to-Agent” (A2A) Economy – In 2025, we’ve talked to agents. In 2026, agents will talk to agents. We will see the rise of “headless” marketplaces where a Marketing Agent (running on CrewAI) automatically hires a Research Agent (running on AutoGen) via the Model Context Protocol (MCP) to verify facts without human intervention.

- From RAG to LAMs (Large Action Models) – Retrieval-Augmented Generation (RAG) solved the “knowledge” problem. The next frontier is the “action” problem. Frameworks will evolve to support Large Action Models (LAMs) – systems capable not just of reading a PDF, but of clicking buttons, filling forms, and navigating complex UI on your behalf.

- Centralized Control Planes – As “Agent Sprawl” becomes a security risk, IT departments will mandate centralized control planes. The “Wild West” of deploying rogue Python scripts will end. Platforms like Azure AI Agent Service and Salesforce Agentforce will become the mandatory gatekeepers for enterprise deployment, forcing developers to build compliant, observable agents by default.

Agentic AI Frameworks | Summary

At Flobotics, we think 2026 is going to be the end of the “Proof of Concept” era.

The industry is no longer asking “Which LLM should I use?” but rather “Which framework should I choose?” And that’s a big shift.

All surviving AI frameworks – whether heavyweights like LangChain or specialized standards like MCP – will eventually share one common trait:

Reliability.

But the transition from passive chatbots to active digital workers requires a robust architectural foundation. Even the “best” frameworks still depend entirely on your engineering culture, existing tech stack, and governance needs.

If you’re about to choose a future-proof agentic AI framework, prioritize those offering:

- Observability (to see what the agent is doing),

- Control (to stop it when it errs),

- Interoperability (to switch models when the next GPT-5 arrives).

The future belongs to those who can govern their AI.
