Before You Read:

Tool Agents

Single-purpose, prompt-driven solutions powered by Large Language Models (LLMs) or other AI models. They can summarize, classify, extract, or generate text – but they do not act on their own. Every step requires human prompting or orchestration (Human in the loop).

Agentic Workflows

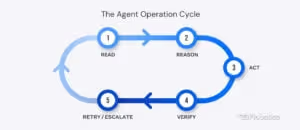

Automations that combine task interpretation with multi-step execution. Scalable and consistent, but limited to predefined paths. They read and use data (via APIs or AI tools) and follow structured sequences within specified guardrails. Agentic workflows can’t self-direct beyond their design.

Autonomous Agents

These are more of an idea than an actual solution. No fully general, unsupervised autonomous agent exists yet. What we call ‘autonomous agents’ today are advanced but still constrained agentic workflows with guardrails and human oversight; they don’t have open-ended, self-directed autonomy.

What Is Agentic AI?

So far, we’ve all heard about ChatGPT-style automations – generative AI tools that summarize notes, generate text or images, and provide likely answers to a question. Sora, Claude, Perplexity: they all share one defining trait. They wait for your instructions.

Most of what gets labeled “agentic” today actually sits much lower on the intelligence scale than people think. Even though these systems seem intelligent, their reasoning is mainly statistical, not conceptual.

Agentic AI is a much broader term.

Agentic AI is the first generation of software capable of pursuing goals and automating multi-step workflows end-to-end with limited supervision – not just answering questions, but actually doing the work inside the guardrails you set.

Until recently, AI could generate text or images but couldn’t take action. Even with prompts, plugins, and rule layers, today’s AI is still nowhere near a fully autonomous agent.

Agentic AI is the slice of AI that can take a goal, figure out the steps, act across your stack – and finish the job inside the guardrails you set.

It simply sits between “autocomplete with extra steps” and “general intelligence” – close enough to the present to be useful, far enough from AGI to stay controllable.

A simple way to think about the landscape is in four levels:

- RPA & minor automations,

- Tool agents,

- Agentic workflows,

- Fully autonomous agents.

If you’d like to learn more about this topic, feel free to read our article: “Agentic AI vs. RPA.”

What’s Not Agentic AI?

It’s important to draw a clear line between what’s agentic and what’s not.

Most of what’s called “agentic” today actually lives in the middle: agentic workflows plus task-focused agents wired into real tools and real data. They can be impressively capable, but they operate inside guardrails. They don’t understand the world, they don’t have stable long-term memory or true causal reasoning, and they don’t run your business on their own.

A lot of confusion comes from blurring these lines:

- Prompt-driven tools (ChatGPT in a browser, image generators) are not agentic.

- Dashboards, analytics, and simple integrations are not agentic.

- RPA bots that click through portals are not agentic.

All of them can be ingredients in an agentic architecture – but they only become agentic when you add goal-orientation, planning, tool use, state, and the ability to act across systems with limited supervision.
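The ingredients listed above (a goal, a plan, tool use, carried state, bounded action) can be sketched in a few lines. This is a minimal illustration, not a production design: the tool names `look_up_record` and `close_record`, the hard-coded `plan` function, and the `max_steps` limit are all hypothetical stand-ins. In a real system, a model would propose the steps and the tools would call actual APIs.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical tools: stand-ins for real API calls (CRM, calendar, portal).
def look_up_record(state: dict) -> dict:
    return {**state, "record": {"id": state["record_id"], "status": "open"}}

def close_record(state: dict) -> dict:
    record = {**state["record"], "status": "closed"}
    return {**state, "record": record}

TOOLS: Dict[str, Callable[[dict], dict]] = {
    "look_up_record": look_up_record,
    "close_record": close_record,
}

def plan(goal: str) -> List[str]:
    """Toy planner (assumption): a real system would ask a model for the steps."""
    plans = {"close the record": ["look_up_record", "close_record"]}
    return plans.get(goal, [])

def run_agent(goal: str, state: dict, max_steps: int = 5) -> Tuple[dict, List[str]]:
    """Pursue a goal by running planned tool calls while carrying state forward."""
    log: List[str] = []
    for step in plan(goal)[:max_steps]:   # guardrail: bounded number of actions
        if step not in TOOLS:             # guardrail: only registered tools may run
            log.append(f"skipped:{step}")
            continue
        state = TOOLS[step](state)
        log.append(f"ran:{step}")
    return state, log

final_state, log = run_agent("close the record", {"record_id": 42})
print(final_state["record"]["status"], log)
```

A prompt-driven tool would stop after producing text. The loop here is what turns the same intelligence into something that executes steps, and the two guardrail checks are what keep it inside predefined limits.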

Traditional RPA is not agentic AI.

These are the classic bots that follow fixed rules, click through portals, copy and paste values, or run scripts. They are fast and reliable, but completely rigid: the moment a screen changes, a field moves, or an exception appears, they break.

They perform actions, but they cannot decide which actions to perform, and that alone disqualifies them from the agentic category.

Prompt-driven generic tools are also not agentic.

Traditional tools like ChatGPT-in-a-browser generate text or images.

They are smart, fluent, and useful, but fundamentally passive.

They wait for instructions, produce summaries, extract data, classify documents, or generate text and images. They provide output, but they do not take initiative. They cannot plan, sequence steps, or complete workflows unless a human orchestrates every move.

Agentic AI uses the same intelligence but connects to tools, APIs, calendars, drives, CRMs, and apps – so it can actually execute work.

Even API-based automations fall short of being agentic.

Integrating systems, syncing data, or triggering simple workflows is valuable, but it lacks any reasoning layer. These systems move information from A to B, but they don’t interpret, adapt, or decide what to do next. They are connective tissue – not decision-makers.

The same goes for dashboards, analytics platforms, and “AI insights” tools.

They can measure, predict, and visualize, but they cannot act. They inform the operator but they don’t replace the operator.

And how about Artificial General Intelligence (AGI)?

It is not agentic AI – yet.

Just like fully autonomous agents, it requires ingredients that are still missing: long-term memory, causal reasoning, stable identity, and generalizable understanding.

AGI feels closer today, and people keep saying it’s “around the corner,” not because we built a breakthrough model, but because AI systems now behave in ways that resemble early forms of agency. They plan. They act. They revise. They even collaborate with other agents.

These are not signs of general intelligence, but they clearly show that we’re building architectures that look suspiciously like the skeleton of something more capable.

AGI isn’t imminent, but it feels closer because the structural pieces that would support AGI – planning, tool use, memory, multi-step reasoning, coordination – are now being built for agentic systems.

This is why the conversation gets interesting: the leap from today’s semi-autonomous agents to fully autonomous agents is almost the same leap as the one from fully autonomous agents to AGI.

The barrier in both cases is the same set of unsolved problems:

- How do you give a machine stable memory?

- How do you make it understand a context that evolves across time?

- How do you let it pursue goals without drifting into unsafe behavior?

- How do you balance autonomy with control?

- How do you give it the ability to generalize the way humans do effortlessly?

So far, humanity hasn’t solved any of that.

In short:

Agentic AI is not everything that uses AI, but it can use all of your digital assets if structured properly.

Common Agentic AI Misconceptions

People see AI generate fluid explanations, clinically styled summaries, or step-by-step reasoning and assume the system actually grasps meaning the way a human does.

It doesn’t.

Here are some misconceptions about agentic AI that we often hear online:

“AI understands / knows everything.”

Modern models simulate understanding by predicting patterns across billions of examples. They don’t build mental models, they don’t interpret cause and effect, and they don’t know anything in the human sense of knowledge.

This misconception inflates both trust and fear – teams overestimate AI’s autonomy while underestimating the need for oversight.

Believing AI “understands” invites unsafe delegation and misplaced expectations, especially in workflows involving clinical nuance, legal constraints, or payer-specific rules that require actual reasoning.

“Fluent language equals real comprehension.”

Fluent language output triggers a cognitive bias: if it talks like us, it must think like us. But fluency is a surface feature, not a sign of cognition.

This leads professionals to trust AI-generated explanations too quickly, assuming a coherent paragraph means the model reasoned its way to the answer. In reality, the model is generating the most statistically likely continuation.

This misconception is dangerous because it creates a false sense of expertise – especially in complicated revenue cycle management (RCM) scenarios, where terminology looks authoritative but correctness depends entirely on context the model might not actually understand.

“An AI model that writes like a doctor reasons like one.”

Medical-style language does not imply medical reasoning. Yet many clinicians and administrators fall into this trap.

When an AI writes a clean eligibility interpretation or a compelling denial explanation, it’s easy to forget it lacks the domain experience that informs real decision-making. The risk is that organizations rely on AI as an authority rather than a tool – letting stylistic confidence mask conceptual shallowness.

In RCM, this can lead to incorrect appeal logic, misunderstood payer rules, or confidently wrong benefit interpretations.

“AI possesses context, intentions, and understanding, not just patterns.”

Deep learning models thrive on pattern recognition, not situational comprehension. They don’t understand intentions or context beyond what appears in the prompt.

This becomes a pain point when teams expect AI to “know” institutional rules, payer idiosyncrasies, task history, or organizational policy without being explicitly given them. Without structured memory and guardrails, AI forgets everything between interactions.

Many failures in deployed systems come from expecting AI to “get it” without being given the necessary data every single time.
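One way to picture “being given the necessary data every single time” is a helper that assembles context explicitly before each request. The sketch below is illustrative only: the `MEMORY` store, its keys, and the example facts are invented for this example, and no real model is called.

```python
from typing import Dict, List

# Hypothetical store of facts the model cannot know on its own.
# Keys and contents are made up for illustration.
MEMORY: Dict[str, List[str]] = {
    "payer:acme": ["Prior authorization is required for imaging."],
    "task:1001": ["Eligibility was verified on the previous attempt."],
}

def build_prompt(question: str, keys: List[str]) -> str:
    """Assemble the context explicitly; nothing carries over between calls."""
    facts = [fact for key in keys for fact in MEMORY.get(key, [])]
    context = "\n".join(f"- {fact}" for fact in facts) or "- (no stored context)"
    return f"Known context:\n{context}\n\nQuestion: {question}"

prompt = build_prompt("Can we schedule the MRI?", ["payer:acme", "task:1001"])
print(prompt)
```

If the relevant keys are not passed in, the prompt carries no institutional knowledge at all, which is exactly the failure mode described above.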

“AI can operate fully on its own without guardrails.”

Executives often expect a single AI system to run autonomously across complex operations, making safe and correct decisions indefinitely. But contemporary models require policy layers, identity controls, auditing, escalation logic, error monitoring, and human oversight.

Without guardrails, AI becomes unpredictable.

Autonomous capacity exists only within tight boundaries – outside them, workflows collapse or produce silent errors. Assuming full autonomy too early leads to failed deployments and loss of trust internally.
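A policy layer with escalation logic and auditing can start out very small. The following is a rough sketch under stated assumptions: the action names, the allow-list, and the approval rule are hypothetical, and a real deployment would also tie decisions to identity controls and error monitoring.

```python
from typing import Dict, List

ALLOWED_ACTIONS = {"read_claim", "draft_appeal"}   # hypothetical allow-list
NEEDS_HUMAN_APPROVAL = {"submit_appeal"}           # hypothetical escalation rule
audit_log: List[Dict[str, str]] = []

def check_action(action: str) -> str:
    """Return 'allow', 'escalate', or 'deny', and record the decision."""
    if action in ALLOWED_ACTIONS:
        decision = "allow"
    elif action in NEEDS_HUMAN_APPROVAL:
        decision = "escalate"
    else:
        decision = "deny"   # default-deny: anything unlisted is blocked
    audit_log.append({"action": action, "decision": decision})
    return decision

decisions = [check_action(a) for a in ["read_claim", "submit_appeal", "delete_record"]]
print(decisions)
```

The default-deny branch is the important design choice here: an action nobody anticipated is blocked and logged instead of producing a silent error.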

“AI evolves into autonomy without design.”

Many leaders assume agents will “naturally” learn to handle new workflows or complexities over time. But AI does not self-teach new domains or build its own operating procedures. Autonomy isn’t emergent – it’s engineered.

Without deliberate design, updates, and guardrails, AI simply repeats patterns from its training or reinforcement loops. This misconception leads to disappointment when early experiments don’t scale across departments or when performance plateaus.

“AI is just a fancy autocomplete for the big companies.”

This misconception comes mainly from engineers who interacted with early generative models and froze their understanding at that level.

They believe AI outputs are shallow, surface-level continuations, and therefore dismiss its ability to plan multi-step tasks, reason across documents, or coordinate actions.

Underestimating AI’s capabilities delays adoption and keeps organizations stuck in manual work long after automation could have replaced it.

“Modern models cannot truly plan or reason.”

That’s also not quite true.

Many assume AI cannot connect steps or maintain logic across multiple actions.

But contemporary systems, when combined with tool access and state tracking, can perform multi-step planning at a surprisingly operational level: retrieving data, interpreting rules, generating sequences, verifying work, and adjusting on the fly.

Underestimating this makes organizations blind to automation opportunities hiding in workflows assumed to be “too complex” for AI.

“AI must deliver flawless accuracy to be useful.”

Executives often set unrealistic expectations: 99%+ accuracy, zero variance, zero hallucinations.

But even humans don’t operate at these levels.

AI only needs to be consistent and scalable – not perfect.

A system that performs at 85–95% accuracy continuously can produce more reliable output than a human team fatigued by volume. Demanding perfection blocks adoption that would have delivered enormous ROI even at human-level error rates.

“Errors mean the system is unfit for production.”

In probabilistic systems, occasional errors are normal. With proper tuning, monitoring, and escalation paths, AI delivers strong outcomes despite variance.

But many teams treat every mistake as catastrophic instead of expected, preventing iteration and improvement.
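An escalation path can be as simple as routing low-confidence results to a person instead of treating them as failures. This sketch assumes each result arrives with a confidence score between 0 and 1. The `0.8` threshold and the sample items are illustrative values, not recommendations.

```python
from typing import List, Tuple

def route(results: List[Tuple[str, float]], threshold: float = 0.8):
    """Split results into auto-accepted items and items escalated to a human."""
    accepted = [item for item, conf in results if conf >= threshold]
    escalated = [item for item, conf in results if conf < threshold]
    return accepted, escalated

# Made-up sample data: (item id, confidence score).
sample = [("claim-1", 0.95), ("claim-2", 0.62), ("claim-3", 0.88)]
accepted, escalated = route(sample)
print(accepted, escalated)
```

Tuning the threshold over time, based on what reviewers find in the escalated queue, is one concrete form the iteration mentioned above can take.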

“Intelligence alone can overcome chaotic inputs.”

Teams assume AI will “figure out” inconsistent payer data, outdated forms, or messy clinical notes through sheer intelligence.

But variability, not cognition, is the real obstacle. AI can handle complex reasoning, but not fragmented data pipelines without process redesign.

Intelligence can’t compensate for operational chaos.

“AI needs constant supervision.”

Yes, in the same way humans do: periodic QC, exception review, model updates, guardrail tuning, and monitoring. It does not need micromanagement, but it does require oversight.

“AI is safest when isolated.”

Some organizations try to confine AI to sandboxes that have no access to real systems.

This avoids risk but also prevents value. AI that cannot act is just a sophisticated report generator. Useful AI requires controlled but meaningful system access, not total containment.

“AI learns automatically once deployed.”

A widespread fantasy is that AI improves simply by being used.

Real improvement requires structured feedback, retraining, memory systems, or human reinforcement. When organizations expect self-evolving behavior, they misinterpret stagnation as failure.

“AI will eliminate human jobs entirely.”

This fear slows adoption and increases internal resistance.

In reality, AI removes the repetitive burden from staff, allowing teams to focus on exceptions, complex judgment, and patient-facing impact. The work shifts; the workforce doesn’t disappear.

“AI will replace entire departments immediately.”

On the opposite side, some executives assume they can remove headcount rapidly.

But AI requires supervision, exception handling, and governance. Overestimating how fast automation replaces staff leads to unrealistic timelines and failed deployments.

“AI can be dropped into any workflow instantly.”

Teams expect plug-and-play automation regardless of messy inputs.

But AI needs clean interfaces, structured prompts, reliable data, and corrected upstream processes to perform well. Poor inputs produce poor outputs, no matter the model.

Why do AI implementations fail?

Most failures arise from misconceptions, not from model limitations:

- treating AI like deterministic software

- expecting perfection on day one

- isolating AI instead of integrating it

- misunderstanding the difference between tools and agents

- expecting autonomy without guardrails

- avoiding process redesign

- skipping supervision and monitoring

The technology works; the adoption of frameworks does not always.

If you’d like to learn more about it, feel free to read our article on Agentic AI Frameworks.

What Is Agentic AI? | Summary

Until recently, AI could generate text or images but couldn’t take action. Even with prompts, plugins, and rule layers, today’s AI is still nowhere near a fully autonomous agent.

A simple way to think about the landscape is in four levels: RPA & minor automations, Tool agents, Agentic workflows, and Fully autonomous agents.

If you remember one thing from this 101, make it this:

Agentic AI is not everything that uses AI.

It’s the slice of AI that can take a goal, figure out the steps, act across your stack – and finish the job inside the guardrails you set. It simply sits between “autocomplete with extra steps” and “general intelligence” – close enough to the present to be useful, far enough from AGI to stay controllable.

Time is revenue. Don’t lose either.

Let’s talk!
